OpenStack Client Tools

Welcome to the world of next-generation OpenStack client tools written in Rust.

As a programming language, Rust is gaining more and more traction in low-level programming. Its distinctive safety features make it a very good fit for the complex world of OpenStack. As a compiled language it is also well suited for CLI tools, letting users escape Python dependency issues. In the container era, shipping a single small binary is much easier.

The current focus of the project is introducing Rust as a programming language into the ecosystem of OpenStack user-facing tooling by providing an SDK as well as a CLI and a TUI.

Design principles

After maintaining OpenStack client-facing tools for a long time, it became clear that things cannot continue the way they were. OpenStack services are very different and do not behave similarly to each other. There were multiple attempts to standardize APIs across services, but they did not work. Then there were attempts to standardize services at the SDK level. This partially works, but requires a very high implementation effort and permanent maintenance. Reverse-engineering a service API from the API-REF documentation is very time-consuming. Another major issue is that API-REF is a human-written document that very often diverges from the code, which causes problems when covering those resources in the SDK/CLI. Tracking how the API evolves adds further maintenance effort.

As a solution, a completely different approach has been chosen to reduce maintenance effort while guaranteeing that the API bindings match what the service actually supports. Instead of a human reading an API-REF written by another human (who may or may not have been involved in implementing the feature), OpenAPI specs were chosen as the source of truth. Since such specs did not exist, and multiple earlier attempts to introduce OpenAPI in OpenStack had failed, the process was restarted. Currently a lot of work is happening in OpenStack to produce specs for the majority of the services. The main component responsible for that is codegenerator. Apart from inspecting the source code of selected OpenStack services, it is also capable of generating the tools in this repository. There is, of course, a set of framework code, but the REST API wrapping and the command implementations are fully generated.

Ultimately this means there is no need to touch the generated code at all. Once resources available in a service's OpenAPI spec have been initially integrated into the subprojects here, they are maintained by the generator. New features the service adds to a resource are picked up automatically once the OpenAPI spec is updated.

Generating code from OpenAPI has another logical consequence: the generated code provides exactly the same features as the API itself. If the API does something not very logical, the SDK/CLI will do it the same way. Previously the burden of coping with that always landed on the shoulders of the SDK/CLI maintainers. Now, if the API is bad, the API author is to blame.

- Code is automatically generated from the OpenAPI specs of the service APIs.

- Unix philosophy: "do one thing well". Every resource/command binding focuses only on the exact API. Combining API calls is not in scope of the generated code. "Simple is better than complex" (The Zen of Python).

- SDK/CLI bindings wrap the API with no additional guessing or normalization.

- The user is in full control of input and output. Microversion X.Y has a concrete body schema with no faulty merges between different versions.

Components

- openstack_sdk - SDK

- openstack_cli - The new and shiny CLI for OpenStack

- openstack_tui - Text (Terminal) User Interface

- structable_derive - Helper crate for producing output similar to the old OpenStackClient

Trying out

Install binary

Compiled versions can be installed from the GitHub releases. Every release comes with a dedicated installer that can be retrieved with the following command:

curl --proto '=https' --tlsv1.2 -LsSf https://github.com/gtema/openstack/releases/download/openstack_cli-v0.8.2/openstack_cli-installer.sh | sh

TUI can be installed similarly:

curl --proto '=https' --tlsv1.2 -LsSf https://github.com/gtema/openstack/releases/download/openstack_tui-v0.1.6/openstack_tui-installer.sh | sh

Build locally

Alternatively, the project can be compiled from source. Since the project is pure Rust, it only requires a Rust toolchain.

cargo b

Run

Once the binary is available, just start playing with it. If you already have your clouds.yaml config file from python-openstackclient, you are good to go:

osc --help

osc --os-cloud devstack compute flavor list

Authentication and authorization

Understanding authentication in OpenStack is far from trivial. In general, authentication and authorization are different things, but in OpenStack they are mixed into a single API request. When authenticating, the user passes identification data (username and password or similar) together with the requested authorization (project scope, domain scope or similar). In response, a session token is returned that must be sent with every subsequent request. When the authorization scope needs to change (i.e. to perform an API call in the scope of a different project), re-authorization must be performed. That may be done with the same user identification data or with the existing session token.

Existing Python-based OpenStack tools keep only one active session and re-authorize whenever required. There is support for token caching, which is bound to the authorization request used. When a new session is requested, the cache is searched (when enabled) and a matching token is returned and reused. When MFA or SSO is used, this process results in a very poor user experience, forcing the user to re-enter verification data every time a new scope is requested with a new session.

In this project, authentication and authorization in user-facing applications are handled differently. Auth caching to the file system is enabled by default. User identification data (auth_url + user name + user domain name) is combined into a hash, which is used as the 1st level caching key and points to a map of authorization data and the corresponding successful authorization responses. The key on the 2nd level is a hash calculated from the scope information, and the value is the returned token with catalog and expiration information. This structure makes it possible to immediately retrieve valid auth information for the requested scope if it already exists (it may even be shared between processes), or to reuse valid authentication data to obtain the newly requested authorization, sparing the user from going through MFA or SSO again.
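The two-level layout described above can be sketched with the Rust standard library alone. This is a minimal illustration, not the project's code: the names `AuthCache`, `CachedAuth`, `identity_key` and `scope_key` are hypothetical, and `DefaultHasher` only stands in for whatever hash the real implementation uses (its algorithm is not guaranteed to be stable across Rust releases, so a persistent file cache would need a stable hash).

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::HashMap;
use std::hash::{Hash, Hasher};

/// Hypothetical cached authorization: a token plus its expiration (Unix seconds).
#[derive(Clone, Debug, PartialEq)]
pub struct CachedAuth {
    pub token: String,
    pub expires_at: u64,
}

/// 1st level key: hash of the user identification data (no secrets involved).
pub fn identity_key(auth_url: &str, user_name: &str, user_domain_name: &str) -> u64 {
    let mut h = DefaultHasher::new();
    (auth_url, user_name, user_domain_name).hash(&mut h);
    h.finish()
}

/// 2nd level key: hash of the requested scope, e.g. ("project", "demo", "Default").
pub fn scope_key(scope_kind: &str, scope_name: &str, scope_domain: &str) -> u64 {
    let mut h = DefaultHasher::new();
    (scope_kind, scope_name, scope_domain).hash(&mut h);
    h.finish()
}

/// identity key -> (scope key -> cached authorization)
#[derive(Default)]
pub struct AuthCache {
    entries: HashMap<u64, HashMap<u64, CachedAuth>>,
}

impl AuthCache {
    pub fn insert(&mut self, identity: u64, scope: u64, auth: CachedAuth) {
        self.entries.entry(identity).or_default().insert(scope, auth);
    }

    pub fn get(&self, identity: u64, scope: u64) -> Option<&CachedAuth> {
        self.entries.get(&identity)?.get(&scope)
    }
}
```

Keeping the identity on the outer level is what allows every scope obtained by the same user to be found from one lookup.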

Configuration

Rust-based tools support the typical clouds.yaml/secure.yaml files for configuration.

Using traditional configuration files may become challenging when many connections need to be configured. For example, there might be several lists of connections from different providers, some of them distributed through source control. This can be simplified by merging them into a single "virtual" configuration instead of explicitly specifying them as runtime parameters. The OS_CLIENT_CONFIG_PATH environment variable can point at a single file or a colon-separated list of files and directories.

export OS_CLIENT_CONFIG_PATH=./clouds.yaml:~/.config/clouds1.yaml:~/.config/clouds2.yaml:~/.config/secure.yaml:~/.config/openstack/

With the variable set like this, every tool of the project merges all individual elements together. When an entry is a directory, the traditional clouds.yaml/secure.yaml files are searched for in that directory and merged into the resulting configuration.
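As an illustration of how such a value could be expanded, here is a minimal sketch that only produces the ordered list of files to read; parsing and merging the YAML is left out. The function name `config_files`, the injected `is_dir` callback and the handling of `~` are assumptions for this example, not the project's implementation.

```rust
use std::path::{Path, PathBuf};

/// Hypothetical helper: expand an OS_CLIENT_CONFIG_PATH-style value into the
/// ordered list of files to merge. `home` replaces a leading `~`, and `is_dir`
/// is injected so the logic can be exercised without touching the filesystem.
pub fn config_files(value: &str, home: &Path, is_dir: impl Fn(&Path) -> bool) -> Vec<PathBuf> {
    let mut files = Vec::new();
    for entry in value.split(':').filter(|e| !e.is_empty()) {
        // Expand `~` and `~/...` relative to the home directory.
        let path = if entry == "~" {
            home.to_path_buf()
        } else if let Some(rest) = entry.strip_prefix("~/") {
            home.join(rest)
        } else {
            PathBuf::from(entry)
        };
        if is_dir(&path) {
            // A directory contributes its clouds.yaml and secure.yaml.
            files.push(path.join("clouds.yaml"));
            files.push(path.join("secure.yaml"));
        } else {
            files.push(path);
        }
    }
    files
}
```

In a real program the callback would simply be `|p| p.is_dir()`, and the value would come from `std::env::var("OS_CLIENT_CONFIG_PATH")`.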

Most authentication methods support interactive data provisioning. When certain required auth attributes are not provided in the configuration file or through the supported CLI arguments (or environment variables), clients that implement the AuthHelper interface can request such data from the user. For example, the CLI shows a prompt, while the TUI opens a popup requesting the user input.

Authentication methods

Currently only a subset of all possible authentication methods is covered; work on adding further methods is ongoing.

v3Token

The most basic auth method is an API token (X-Auth-Token). In clouds.yaml this requires setting auth_type to one of [v3token, token]. The token itself is specified in the token attribute.

v3Password

The most common auth method is username/password. In clouds.yaml this requires setting auth_type to one of [v3password, password] or leaving it empty.

The following attributes specify the authentication data:

- username - The user name

- user_id - The user ID

- password - The user password

- user_domain_name - The name of the domain the user belongs to

- user_domain_id - The ID of the domain the user belongs to

Either username or user_id must be specified, as well as either user_domain_name or user_domain_id.
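The requirement above can be expressed as a small validation routine. This is a sketch of the rule as stated here, not the SDK's own check: `PasswordAuth` and `missing_password_attrs` are hypothetical names, and reporting a missing password (so an interactive client could prompt for it) is an assumption added for the example.

```rust
/// Hypothetical subset of the `auth` section of a clouds.yaml entry.
#[derive(Default)]
pub struct PasswordAuth<'a> {
    pub username: Option<&'a str>,
    pub user_id: Option<&'a str>,
    pub password: Option<&'a str>,
    pub user_domain_name: Option<&'a str>,
    pub user_domain_id: Option<&'a str>,
}

/// Returns the names of missing attributes: username or user_id, plus
/// user_domain_name or user_domain_id. A missing password is reported too,
/// since an interactive client could prompt the user for it.
pub fn missing_password_attrs(auth: &PasswordAuth) -> Vec<&'static str> {
    let mut missing = Vec::new();
    if auth.username.is_none() && auth.user_id.is_none() {
        missing.push("username or user_id");
    }
    if auth.user_domain_name.is_none() && auth.user_domain_id.is_none() {
        missing.push("user_domain_name or user_domain_id");
    }
    if auth.password.is_none() {
        missing.push("password");
    }
    missing
}
```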

v3Totp

When the user login is protected with MFA, an OTP token must be specified. It is represented as passcode, but it is not intended to be specified directly in clouds.yaml.

v3Multifactor

A better way to handle MFA is the v3multifactor auth type. In this case the configuration looks a little different:

- auth_type=v3multifactor

- auth_methods is a list of individual auth_types combined in the authentication flow (i.e. ['v3password', 'v3totp'])

When a cloud connection is established in interactive mode and the server responds that it requires additional authentication methods, those are processed based on the available data.

v3WebSso

An authentication method that is becoming more and more popular is Single Sign-On using a remote Identity Provider (IdP). This flow requires the user to authenticate in the browser directly with the IdP. The following data must be provided in the configuration for this mode to be used:

- auth_type=v3websso

- identity_provider - identity provider as configured in Keystone

- protocol - IdP protocol as configured in Keystone

Note: This authentication type only works in interactive mode. For the CLI this means there must be a valid terminal (echo foo | osc identity user create will not work).

v3ApplicationCredential

Application credentials provide a way to delegate a user’s authorization to an application without sharing the user’s password authentication. This is a useful security measure, especially for situations where the user’s identification is provided by an external source, such as LDAP or a single-sign-on service. Instead of storing user passwords in config files, a user creates an application credential for a specific project, with all or a subset of the role assignments they have on that project, and then stores the application credential identifier and secret in the config file.

Multiple application credentials may be active at once, so you can easily rotate application credentials by creating a second one, converting your applications to use it one by one, and finally deleting the first one.

Application credentials are limited by the lifespan of the user that created them. If the user is deleted, disabled, or loses a role assignment on a project, the application credential is deleted.

Required configuration:

- auth_type=v3applicationcredential

- application_credential_secret - the secret part of the application credential

- application_credential_id - application credential ID

- application_credential_name - application credential name. Note: user data must be specified when the application credential name is used

- user_id - user ID when application_credential_name is used

- user_name - user name when application_credential_name is used

- user_domain_id - user domain ID when application_credential_name is used

- user_domain_name - user domain name when application_credential_name is used

Either application_credential_id or application_credential_name is required; in the latter case the user information is required as well.
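A sketch of that rule as a validation function follows. The names `AppCredAuth` and `validate_app_cred` are hypothetical. Two details are assumptions based on Keystone semantics rather than on this document: application credential names are only unique per user (hence the user requirement), and a user given by name also needs a domain.

```rust
/// Hypothetical view of the application credential attributes.
#[derive(Default)]
pub struct AppCredAuth<'a> {
    pub application_credential_id: Option<&'a str>,
    pub application_credential_name: Option<&'a str>,
    pub application_credential_secret: Option<&'a str>,
    pub user_id: Option<&'a str>,
    pub user_name: Option<&'a str>,
    pub user_domain_id: Option<&'a str>,
    pub user_domain_name: Option<&'a str>,
}

pub fn validate_app_cred(a: &AppCredAuth) -> Result<(), String> {
    if a.application_credential_secret.is_none() {
        return Err("application_credential_secret is required".into());
    }
    if a.application_credential_id.is_some() {
        // The ID alone identifies the credential.
        return Ok(());
    }
    if a.application_credential_name.is_none() {
        return Err("application_credential_id or application_credential_name is required".into());
    }
    // A name is only unique per user, so the user must be identified as well
    // (by ID, or by name plus domain).
    let has_user = a.user_id.is_some()
        || (a.user_name.is_some() && (a.user_domain_id.is_some() || a.user_domain_name.is_some()));
    if has_user {
        Ok(())
    } else {
        Err("user information is required with application_credential_name".into())
    }
}
```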

v3OidcAccessToken

Authentication with an OIDC access token is supported in the same way as in the Python OpenStack tools.

Required configuration:

- auth_type=v3oidcaccesstoken (or oidaccesstoken)

- identity_provider_id - identity provider ID

- protocol - identity provider protocol (usually oidc)

- access_token - access token received from the remote identity provider

Caching

As described above, unlike the Python OpenStack tooling, authentication caching is enabled by default. It can be disabled with cache.auth: false in clouds.yaml.

Data is cached locally in the ~/.osc folder as a set of files, where each file name is a hash of the authentication information (discarding sensitive data). The content of each file is a serialized map of authorization data (scope) to token information (catalog, expiration, etc.).

Every time a new connection needs to be established, the cache is first searched for an exact match of the supplied authentication and authorization information. When there is no usable information (no information at all, or the cached token has already expired), the cache is searched for any valid token, ignoring the scope (authz). If a valid token is found, it is used to obtain a new authorization with the required scope. Otherwise a new authentication is performed.
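The lookup order can be condensed into a small decision function. This sketch assumes a simplified in-memory list of cached entries with Unix-second expirations; `CachedScope`, `Next` and `plan` are hypothetical names and the real cache stores considerably more (catalog, roles, etc.).

```rust
/// Hypothetical cached entry for one scope.
pub struct CachedScope {
    pub scope: String,
    pub expires_at: u64,
}

/// What the client should do next, following the lookup order described above.
#[derive(Debug, PartialEq)]
pub enum Next<'a> {
    /// An unexpired token for exactly the requested scope exists.
    UseCached(&'a str),
    /// Another unexpired token exists: use it to obtain the requested scope.
    Rescope { from_scope: &'a str },
    /// Nothing usable: perform a full authentication (possibly MFA/SSO).
    Authenticate,
}

pub fn plan<'a>(entries: &'a [CachedScope], wanted_scope: &str, now: u64) -> Next<'a> {
    // 1. Exact match for the requested scope that has not expired yet.
    if let Some(e) = entries.iter().find(|e| e.expires_at > now && e.scope == wanted_scope) {
        return Next::UseCached(&e.scope);
    }
    // 2. Any valid token, ignoring the scope; 3. otherwise authenticate anew.
    match entries.iter().find(|e| e.expires_at > now) {
        Some(e) => Next::Rescope { from_scope: &e.scope },
        None => Next::Authenticate,
    }
}
```

Only the last branch requires the user to go through MFA or SSO again, which is the point of caching per identity rather than per scope.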

API operation mapping structure

Python-based OpenStack API binding tools are structured per resource: every API resource is a class/object with multiple methods for resource CRUD and other operations. Moreover, microversion differences are also handled inside this single object. This makes method typing problematic and indefinite (i.e. when create and get operations return different structures, or microversions require modified types).

Since Rust is a strongly typed programming language, the same approach does not work (nor did it prove to be a good one). Every unique API call (URL + method + payload type) is represented by a dedicated module (simple enums are still mapped into the same module). All RPC-like actions of OpenStack services are also represented by dedicated modules. When an operation supports different request body schemas in different microversions, each schema is implemented by a dedicated module as well. This gives the user better control, since the object has definite constraints and explicitly declares support for a certain microversion.
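A toy illustration of the idea: two request bodies for the same URL and method live in separate modules, so each type only carries the fields valid for its microversion. The module names, the `MICROVERSION` constant and the field set are hypothetical and much smaller than the generated SDK types; `hostname` is used only as an example of a field that does not exist in 2.0.

```rust
/// Request body for the oldest microversion.
pub mod create_20 {
    pub struct Request {
        pub name: String,
        pub flavor_ref: String,
    }
    impl Request {
        pub const MICROVERSION: &'static str = "2.0";
    }
}

/// Request body for a newer microversion with an extra field.
pub mod create_294 {
    pub struct Request {
        pub name: String,
        pub flavor_ref: String,
        // Not accepted by 2.0: the 2.0 type above simply has no such field.
        pub hostname: Option<String>,
    }
    impl Request {
        pub const MICROVERSION: &'static str = "2.94";
    }
}
```

Trying to set `hostname` on `create_20::Request` is a compile-time error, which is the "definite constraints" mentioned above.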

OpenStack API bindings (SDK)

Every platform API requires SDK bindings for various programming languages. OpenStack API bindings for Rust are no exception. This project comes with the openstack_sdk crate, providing an SDK with both synchronous and asynchronous interfaces.

API bindings are generated from the OpenAPI specs of the corresponding services. This means they only wrap the API and usually do not provide additional convenience features. The major benefit is that no maintenance effort is required for the generated code. Once an OpenStack service updates the OpenAPI spec for the integrated resources, the changes become available with the next regeneration.

Features

- Sync and Async interface

- Query, Find and Pagination interfaces implementing basic functionality

- RawQuery interface providing more control over the API invocation, with upload and download capabilities

- Every combination of URL + HTTP method + body schema is represented by a dedicated module

- The user is in charge of the return data schema

Structure

Every single API call is represented by a dedicated module with a structure implementing the REST Endpoint interface. That means a GET operation is a separate implementation from a POST operation. As described in the Structure document, every RPC-like action and every microversion is implemented in its own module.
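To make the "one structure per call" idea concrete, here is a deliberately simplified sketch. The `Endpoint` trait and `Method` enum below are hypothetical and are not the openstack_sdk trait; the RPC-like action used is Nova's flavor `addTenantAccess` action, served by POST on the flavor's `action` URL.

```rust
#[derive(Debug, PartialEq)]
pub enum Method {
    Get,
    Post,
}

/// Hypothetical, simplified endpoint trait (not the actual openstack_sdk trait).
pub trait Endpoint {
    fn method(&self) -> Method;
    fn endpoint(&self) -> String;
    fn body(&self) -> Option<String> {
        None
    }
}

/// GET flavors/{id}
pub struct GetFlavor {
    pub id: String,
}

impl Endpoint for GetFlavor {
    fn method(&self) -> Method {
        Method::Get
    }
    fn endpoint(&self) -> String {
        format!("flavors/{}", self.id)
    }
}

/// POST flavors/{id}/action with an RPC-like action body.
pub struct AddTenantAccess {
    pub id: String,
    pub tenant: String,
}

impl Endpoint for AddTenantAccess {
    fn method(&self) -> Method {
        Method::Post
    }
    fn endpoint(&self) -> String {
        format!("flavors/{}/action", self.id)
    }
    fn body(&self) -> Option<String> {
        Some(format!(r#"{{"addTenantAccess": {{"tenant": "{}"}}}}"#, self.tenant))
    }
}
```

A generic client then only needs to understand the trait, not each individual call.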

Using

The simplest example demonstrating how to list compute flavors:

use openstack_sdk::api::{paged, Pagination, QueryAsync};
use openstack_sdk::{AsyncOpenStack, config::ConfigFile, OpenStackError};
use openstack_sdk::types::ServiceType;
use openstack_sdk::api::compute::v2::flavor::list;

async fn list_flavors() -> Result<(), OpenStackError> {
    // Get the builder for the listing Flavors Endpoint
    let mut ep_builder = list::Request::builder();
    // Set the `min_disk` query param
    ep_builder.min_disk("15");
    let ep = ep_builder.build().unwrap();

    let cfg = ConfigFile::new().unwrap();
    // Get connection config from clouds.yaml/secure.yaml
    let profile = cfg.get_cloud_config("devstack").unwrap().unwrap();
    // Establish connection
    let mut session = AsyncOpenStack::new(&profile).await?;

    // Invoke service discovery when desired.
    session.discover_service_endpoint(&ServiceType::Compute).await?;

    // Execute the call with pagination limiting maximum amount of entries to 1000
    let data: Vec<serde_json::Value> = paged(ep, Pagination::Limit(1000))
        .query_async(&session)
        .await
        .unwrap();

    println!("Data = {:?}", data);
    Ok(())
}

Documentation

The current crate documentation is known to be very poor. This will be addressed in the future, but for now the best way to figure out how it works is to look at openstack_cli and openstack_tui, which use it.

Crate documentation is published here

Project documentation is available here

OpenStackClient

osc is a CLI for OpenStack written in Rust. It relies on the corresponding openstack_sdk crate (library) and is generated from OpenAPI specifications. This means the maintenance effort for the tool is much lower than for the fully human-written python-openstackclient. Because it is auto-generated, there are certain differences from the Python CLI, but also an enforced UX consistency.

NOTE: As a new tool, it tries to solve some issues of the original python-openstackclient. This means it cannot provide a seamless migration from one tool to the other.

The command implementation code is produced by codegenerator, which means only low maintenance is required for that code.

Features

- Advanced authentication caching built in and enabled by default

- Status-based resource coloring (resource list table rows are colored by the resource state)

- Output configuration (using $XDG_CONFIG_DIR/osc/config.yaml it is possible to configure which fields are returned when listing resources)

- Strict microversion binding for resource modification requests (instead of openstack server create ..., which will not work with all microversions, you use osc compute server create290, which will only work if the server supports it; this is similar to openstack --os-compute-api-version X.Y). It behaves the same on every cloud, independent of which microversions the cloud supports (as long as it supports the required microversion).

- Can be wonderfully combined with jq for ultimate control of the necessary data (osc compute server list -o json | jq -r ".[].flavor.original_name")

- Output everything the cloud sent (osc compute server list -o json returns fields we never even knew about, but the cloud sent us)

- osc api as an API wrapper allowing the user to perform any direct API call by specifying service type, URL, method and payload. This can be used, for example, when a certain resource is not currently implemented natively.

- osc auth with subcommands for dealing explicitly with authentication (showing current auth info, renewing auth, MFA/SSO support)

Microversions

Initially python-openstackclient used the lowest microversion unless an additional argument specifying the microversion was passed. Later, while commands were being switched to the OpenStackSDK, the highest possible microversion started being used (again unless the user explicitly requested a microversion with --XXX-api-version Y.Z). What both approaches have in common is that they give the user control over the version, which is crucial to guarantee stability. The disadvantage of both is that they come with certain opinions that do not necessarily match what the user expects, and they make it hard to anticipate what will happen. For the end user, reading the help page of a command is complex and error-prone when certain parameters appear, disappear and re-appear with different types between microversions. Implementing (and using) such a command is also complex and error-prone.

osc tries to get the best of both approaches by providing dedicated commands for microversions (i.e. create20, create294). The latest microversion command always has a general alias (create in the above case) that lets the user explicitly use the latest microversion, which, however, does not guarantee it can be invoked with the requested parameters. This approach allows the user to be very explicit about the requirements and to have a guarantee of the expected parameters. When a newer microversion is required, the user should explicitly perform a "migration" step, adapting the invocation to the newer set of parameters. Microversion (or functionality) deprecation is also much simpler this way and is handled by marking the whole command deprecated and/or dropping it completely.
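The relation between the general alias and the microversion-specific commands can be sketched as "pick the variant with the highest microversion". This is only an illustration of the naming scheme; `resolve_alias` and `parse_suffix` are hypothetical, osc may well wire the alias statically, and treating the first digit of the suffix as the major version (as in 20 for 2.0) is an assumption.

```rust
/// Hypothetical: turn a command suffix such as "294" into (2, 94).
/// Assumes a single-digit major version, as in "20" -> 2.0.
fn parse_suffix(suffix: &str) -> Option<(u32, u32)> {
    let major: u32 = suffix.get(..1)?.parse().ok()?;
    let minor: u32 = suffix.get(1..)?.parse().ok()?;
    Some((major, minor))
}

/// Resolve a general alias (e.g. "create") to the available variant with
/// the highest microversion, ignoring commands that do not match.
pub fn resolve_alias<'a>(alias: &str, commands: &[&'a str]) -> Option<&'a str> {
    commands
        .iter()
        .filter_map(|cmd| {
            let version = parse_suffix(cmd.strip_prefix(alias)?)?;
            Some((version, *cmd))
        })
        .max_by_key(|(version, _)| *version)
        .map(|(_, cmd)| cmd)
}
```

Comparing (major, minor) as integer tuples matters here: a plain string comparison would wrongly rank 2.9 above 2.10.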

Request timing

osc supports a --timing argument that captures all HTTP requests and outputs timings grouped by URL (ignoring the query parameters) and method.
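The grouping rule can be sketched in a few lines: strip everything after `?` and aggregate count and total duration per (method, URL). The input format and the name `group_timings` are assumptions for this example; how osc actually captures and prints the data is not shown here.

```rust
use std::collections::BTreeMap;
use std::time::Duration;

/// Hypothetical timing aggregation: group (method, URL) pairs with the query
/// string stripped, accumulating call count and total duration.
pub fn group_timings(records: &[(&str, &str, Duration)]) -> BTreeMap<(String, String), (usize, Duration)> {
    let mut groups: BTreeMap<(String, String), (usize, Duration)> = BTreeMap::new();
    for (method, url, elapsed) in records {
        // Ignore everything after '?', i.e. the query parameters.
        let path = url.split_once('?').map_or(*url, |(p, _)| p);
        let entry = groups.entry((method.to_string(), path.to_string())).or_default();
        entry.0 += 1;
        entry.1 += *elapsed;
    }
    groups
}
```

With this rule, paginated requests that differ only in `marker` or `limit` end up in a single row.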

Connection configuration

The osc tool supports connection configuration using clouds.yaml files and environment variables. Unlike python-openstackclient, it does not merge configuration file data with environment variables, because of the number of errors and the unexpected behavior users experience due to such merging.

- --os-cloud <CLOUD_NAME> command argument points to the connection configured in the clouds.yaml file(s).

- $OS_CLOUD environment variable points to the configuration in the clouds.yaml file.

- --cloud-config-from-env flag directs the CLI to ignore the clouds.yaml configuration file completely and rely only on the environment variables (prefixed as usual with OS_).

- --os-cloud-name <CLOUD_NAME> or the $OS_CLOUD_NAME environment variable uses the specified value as the reference connection name, i.e. when the authentication helper resolves missing authentication parameters (like a password or similar).

- With no cloud specified in any of the above ways, a fuzzy select with all cloud connections configured in the clouds.yaml file is used to get the user selection interactively. This does not happen in non-interactive mode (i.e. when stdin is not a terminal).

- --os-project-name and --os-project-id cause the connection to be established with the regular credentials, but use a different project for the scoped token.
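The options above can be read as a resolution order. The sketch below is an interpretation, not osc's code: `Source` and `resolve` are hypothetical, and both the precedence of --cloud-config-from-env and the precedence of --os-cloud over $OS_CLOUD are assumptions. The terminal check would come from the standard library's `std::io::IsTerminal` trait, e.g. `std::io::IsTerminal::is_terminal(&std::io::stdin())`.

```rust
/// Where the connection configuration comes from.
#[derive(Debug, PartialEq)]
pub enum Source {
    /// Named entry from clouds.yaml (via --os-cloud or $OS_CLOUD).
    Cloud(String),
    /// Only OS_* environment variables (--cloud-config-from-env).
    Environment,
    /// No cloud given: ask the user with a fuzzy selector.
    Interactive,
}

/// Hypothetical resolution order; precedence between the sources is assumed.
pub fn resolve(
    from_env_flag: bool,
    os_cloud_arg: Option<&str>,
    os_cloud_env: Option<&str>,
    stdin_is_terminal: bool,
) -> Result<Source, String> {
    if from_env_flag {
        return Ok(Source::Environment);
    }
    if let Some(name) = os_cloud_arg.or(os_cloud_env) {
        return Ok(Source::Cloud(name.to_string()));
    }
    if stdin_is_terminal {
        Ok(Source::Interactive)
    } else {
        // Never prompt when running non-interactively.
        Err("no cloud specified and stdin is not a terminal".to_string())
    }
}
```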

Sometimes it may be undesirable to keep secrets in clouds.yaml or secure.yaml files. In interactive mode such data is retrieved from the user via regular prompts. It is also possible to specify the path to an external executable that is called with 2 parameters: the required attribute key (i.e. password) and the connection name. The executable may be called multiple times with different parameters when required; there is no support for passing all required parameters in one invocation. The executable must not write anything to stdout except the actual value. Whatever is written to stderr is included in the osc log at warning level. Please note that no security guards are possible for what the command actually does. In the future, pre-built helpers with better security handling may be added (i.e. fetching secrets from Vault, Bitwarden, etc.).

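#!/usr/bin/env bash
KEY="$1"
CLOUD="$2"
# Diagnostics go to stderr; osc logs them at warning level
echo "Requested $KEY for $CLOUD" >&2
# Only the secret value itself goes to stdout
echo "a_super_secret"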
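From the caller's side, invoking such a helper boils down to running it with the two documented arguments, reading stdout as the value and treating stderr as diagnostics. A minimal sketch using `std::process::Command`; the function name `fetch_secret`, the printed warning format and the newline trimming are assumptions, not osc's actual behavior.

```rust
use std::process::Command;

/// Hypothetical sketch: call an external secret helper with the attribute key
/// and connection name, return its stdout, forward its stderr as a warning.
pub fn fetch_secret(helper: &str, key: &str, cloud: &str) -> Result<String, String> {
    let output = Command::new(helper)
        .arg(key)
        .arg(cloud)
        .output()
        .map_err(|e| format!("failed to start {helper}: {e}"))?;
    let stderr = String::from_utf8_lossy(&output.stderr);
    if !stderr.trim().is_empty() {
        eprintln!("WARN secret helper: {}", stderr.trim());
    }
    if !output.status.success() {
        return Err(format!("{helper} exited with {}", output.status));
    }
    let stdout = String::from_utf8(output.stdout).map_err(|e| e.to_string())?;
    // Strip the trailing newline that `echo` adds.
    Ok(stdout.trim_end_matches(&['\n', '\r'][..]).to_string())
}
```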

Command interface


CLI configuration

It is possible to configure different aspects of the OpenStackClient (other than the cloud connection credentials) using the configuration file ($XDG_CONFIG_DIR/osc/config.yaml).

This lets the user configure, per resource, which columns are returned when no corresponding runtime arguments are given.

views:
  compute.server:
    # Listing compute servers will only return ID, NAME and IMAGE columns unless `-o wide` or
    # `-f XXX` parameters are being passed
    default_fields: [id, name, image]
    fields:
      - name: id
        width: 38 # Set column width at fixed 38 chars
        # min_width: 1 - Set minimal column width
        # max_width: 1 - Set maximal column width
  dns.zone/recordset:
    # DNS zone recordsets are listed in the wide mode by default.
    wide: true
    fields:
      - name: status
        max_width: 15 # status column can be maximum 15 chars wide

The key of the views map is a resource key shared among all openstack_rust tools and is built in the following form: <SERVICE-TYPE>.<RESOURCE_NAME>[/<SUBRESOURCE_NAME>], where <RESOURCE_NAME>[/<SUBRESOURCE_NAME>] is URL-based naming (for designate, /zone/<ID>/recordset/<RS_ID> would be named zone/recordset, and /volumes/{volume_id}/metadata would become volume/metadata). Please consult the Codegenerator metadata for known resource keys.
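The key format can be taken apart mechanically, which may help when writing a configuration by hand. The sketch below only splits a key according to the form given above; `ViewKey` and `parse_view_key` are hypothetical and do not check whether the resource actually exists.

```rust
/// Parsed form of a view key such as "dns.zone/recordset" (hypothetical helper).
#[derive(Debug, PartialEq)]
pub struct ViewKey<'a> {
    pub service_type: &'a str,
    pub resource: &'a str,
    pub subresource: Option<&'a str>,
}

pub fn parse_view_key(key: &str) -> Option<ViewKey<'_>> {
    // The service type ends at the first '.', e.g. "compute" or "load-balancer".
    let (service_type, rest) = key.split_once('.')?;
    // An optional sub-resource follows the first '/'.
    let (resource, subresource) = match rest.split_once('/') {
        Some((r, s)) => (r, Some(s)),
        None => (rest, None),
    };
    if service_type.is_empty() || resource.is_empty() {
        return None;
    }
    Some(ViewKey { service_type, resource, subresource })
}
```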

Resource view options

- default_fields (list[str])

  A list of fields to be displayed by default (in non-wide mode). If not specified, only certain internally determined fields are displayed. Output columns are sorted in the order given in the list.

- wide (bool)

  If set to true, display all fields. If set to false, display only the default_fields.

- fields (list[obj])

  A list of column configurations. Each consists of:

  - name (str) - field name (resource attribute name)
  - width (int) - column width in characters
  - min_width (int) - minimum column width in characters
  - max_width (int) - maximum column width in characters
  - json_pointer (str) - JSON pointer used to extract data from the resource field. This is only applied in list mode, not in wide mode.
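The interplay of `wide` and `default_fields` can be sketched as a column selection function. `ViewOptions`, `select_columns` and the `wide_flag` parameter (standing for `-o wide`) are hypothetical, and silently dropping configured fields the resource does not have is an assumption made for this example.

```rust
/// Hypothetical subset of the resource view options described above.
pub struct ViewOptions {
    pub default_fields: Vec<String>,
    pub wide: bool,
}

/// Pick the columns to display: everything in wide mode, otherwise the
/// configured default_fields in their configured order, otherwise a
/// built-in default list.
pub fn select_columns(view: &ViewOptions, all: &[&str], builtin_default: &[&str], wide_flag: bool) -> Vec<String> {
    if view.wide || wide_flag {
        return all.iter().map(|c| c.to_string()).collect();
    }
    if !view.default_fields.is_empty() {
        // Keep the configured order; fields the resource lacks are dropped (assumption).
        return view
            .default_fields
            .iter()
            .filter(|f| all.contains(&f.as_str()))
            .cloned()
            .collect();
    }
    builtin_default.iter().map(|c| c.to_string()).collect()
}
```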

Hints

osc allows showing hints after the command output.

- enable_hints (bool) - enable or disable hints. Enabled by default.

- hints (list) - list of general hints that are not command specific.

- command_hints (dict) - dictionary of command-specific hints. The key is the resource name; the value is a dictionary mapping the command name to a list of hints.

All global hints are combined with the command-specific hints, and then a random hint is selected to be shown.

Hints are not always shown; they are hidden behind a random gate.
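The selection described above can be sketched with the random numbers passed in by the caller, which keeps the example free of external crates. `HintConfig` and `pick` are hypothetical names; the 1-in-`gate` showing probability and the "compute.server" resource name format in the usage are assumptions.

```rust
use std::collections::HashMap;

/// Hypothetical mirror of the hint options described above.
pub struct HintConfig {
    pub enable_hints: bool,
    pub hints: Vec<String>,
    /// resource name -> command name -> hints
    pub command_hints: HashMap<String, HashMap<String, Vec<String>>>,
}

impl HintConfig {
    /// Show a hint only when `gate_roll` passes a 1-in-`gate` check, then pick
    /// one of the combined global and command-specific hints using `choice`.
    pub fn pick(&self, resource: &str, command: &str, gate: u64, gate_roll: u64, choice: usize) -> Option<&str> {
        if !self.enable_hints || gate == 0 || gate_roll % gate != 0 {
            return None;
        }
        let specific: &[String] = self
            .command_hints
            .get(resource)
            .and_then(|cmds| cmds.get(command))
            .map(Vec::as_slice)
            .unwrap_or(&[]);
        let candidates: Vec<&str> = self.hints.iter().chain(specific).map(String::as_str).collect();
        if candidates.is_empty() {
            None
        } else {
            Some(candidates[choice % candidates.len()])
        }
    }
}
```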

Text (Terminal) User Interface

Live navigation through OpenStack resources using the CLI can be very cumbersome. This is where ostui (a terminal user interface for OpenStack) rushes to help. At the moment it is in a very early prototyping state, but it has already saved my day multiple times.

It is not trivial to implement a UX with modification capabilities for OpenStack, and in the terminal it is even harder. Therefore the primary goal of ostui is to provide fast navigation through existing resources, eventually with some operations on them.

Support for new resources is continuously being worked on (feel free to open an issue asking for the desired resource/action, or contribute an implementation). For the moment documentation and implementation are not directly in sync, so many more resources may be implemented than are covered in the documentation.

Features

- switching clouds within a TUI session

- switching project scope without exploding clouds.yaml

- resource filtering

- navigation through dependent resources

- k9s-like interface with (hopefully) intuitive navigation

Installation

Compiled binaries are always uploaded as release artifacts for every openstack_tui-vX.Y.Z tag. The binary for the desired platform can be downloaded from GitHub directly or, alternatively, compiled locally when a Rust toolchain is present.

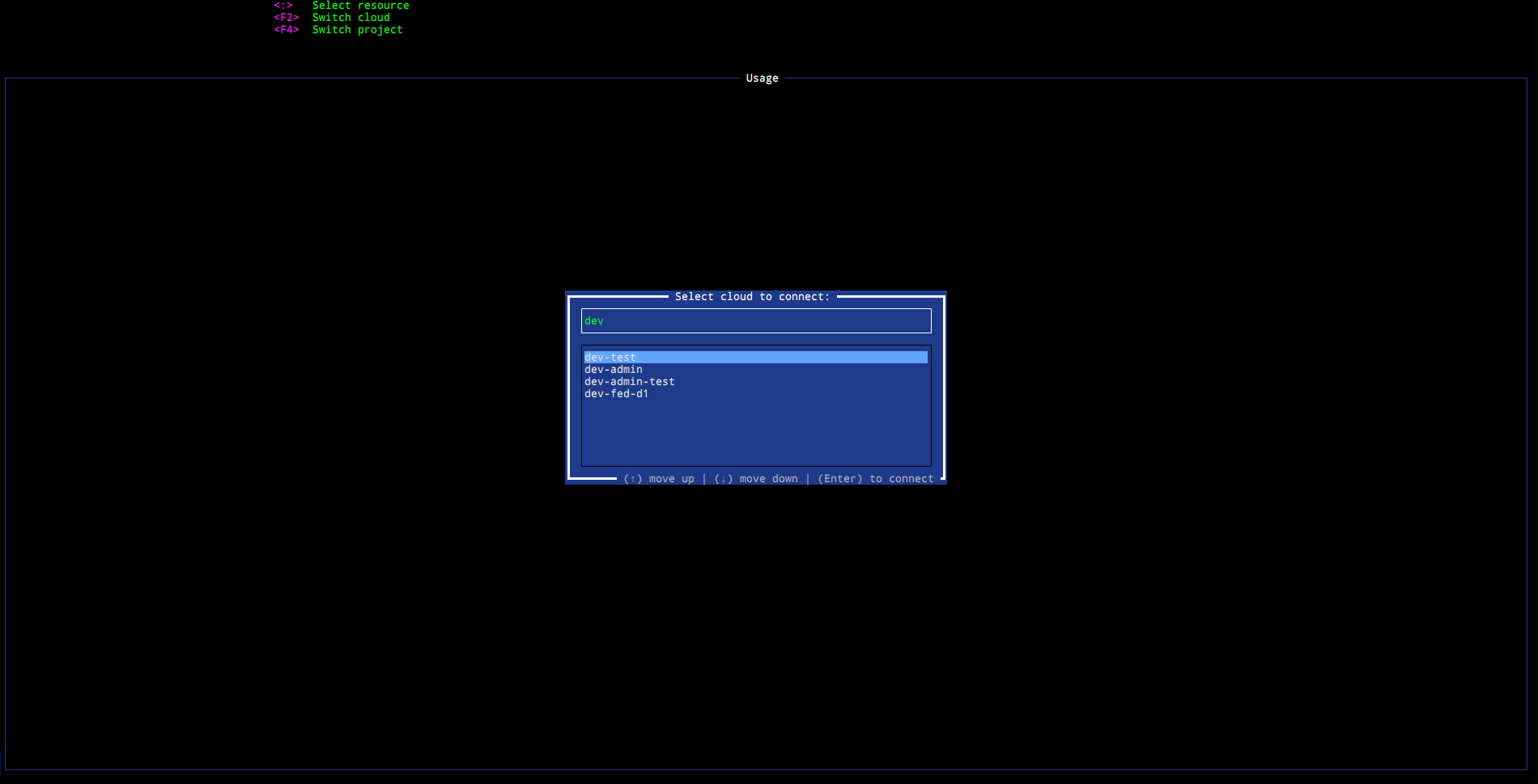

Cloud connection

ostui reads your clouds.yaml file. When started with the --os-cloud devstack argument, a connection to the specified cloud is established immediately. Otherwise a popup opens, offering connections to the clouds configured in the regular clouds.yaml file.

While in the TUI, it is always possible to switch the cloud by pressing <F2>.

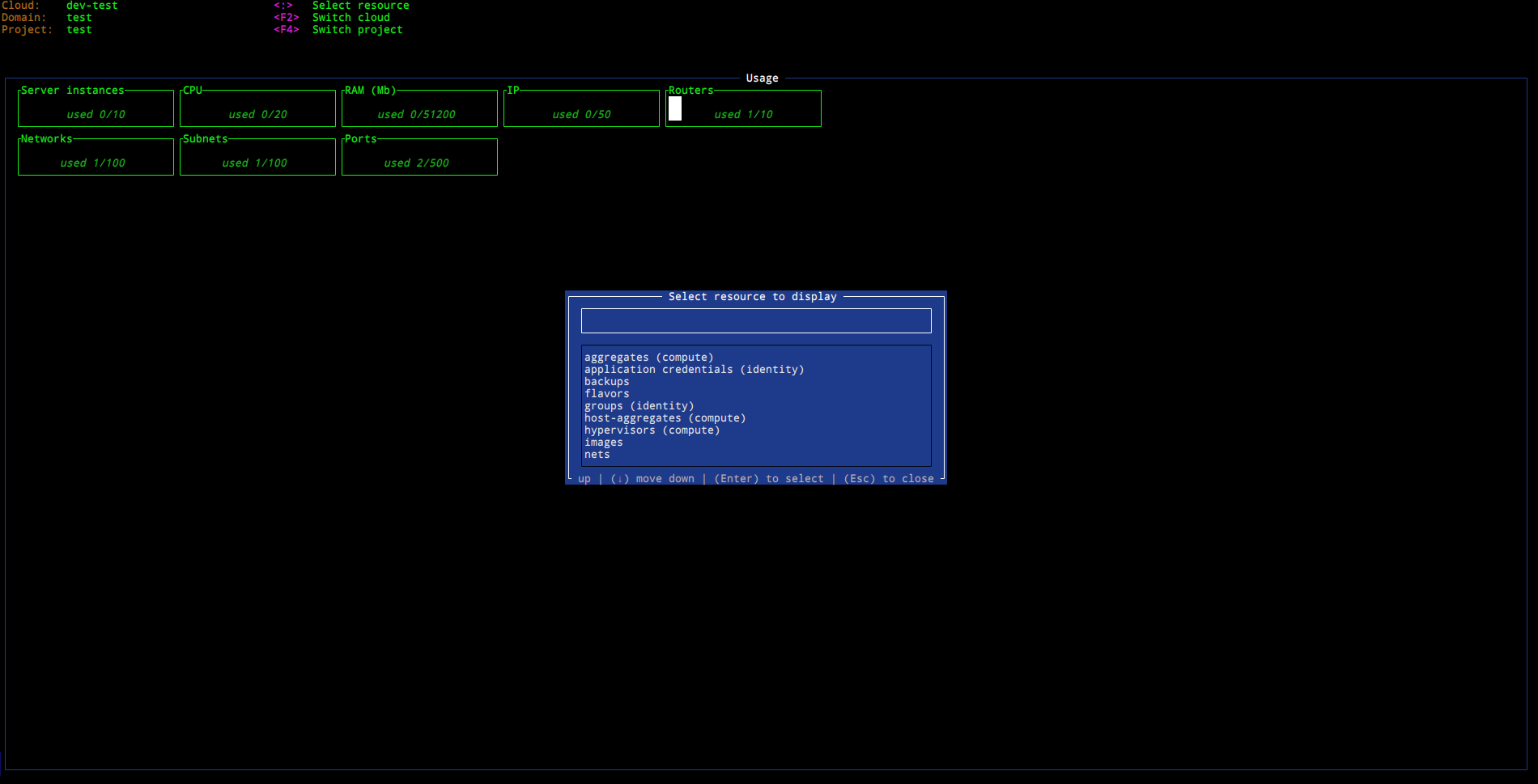

Resource selection

The desired resource is picked by triggering the ResourceSelect popup, which is invoked by default by pressing <:> (like in vim or k9s). The popup displays a list with fuzzy search where the user can select the necessary resource using one of the pre-configured aliases.

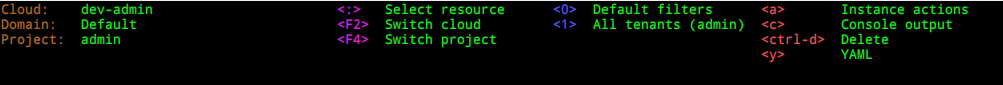

Header

In every mode the TUI header shows context-specific information. It is represented in a few columns using different colors.

The first column shows information about the current connection.

The second column shows selected global keybindings that are not context specific (in a sort of magenta color).

The following columns are context specific and may or may not be present.

Keybindings in blue are used to filter the results of the current view.

Keybindings in red perform an action on the selected entry.

Note: colors are subjective and may be changed in the configuration file, as well as altered by the terminal or display device.

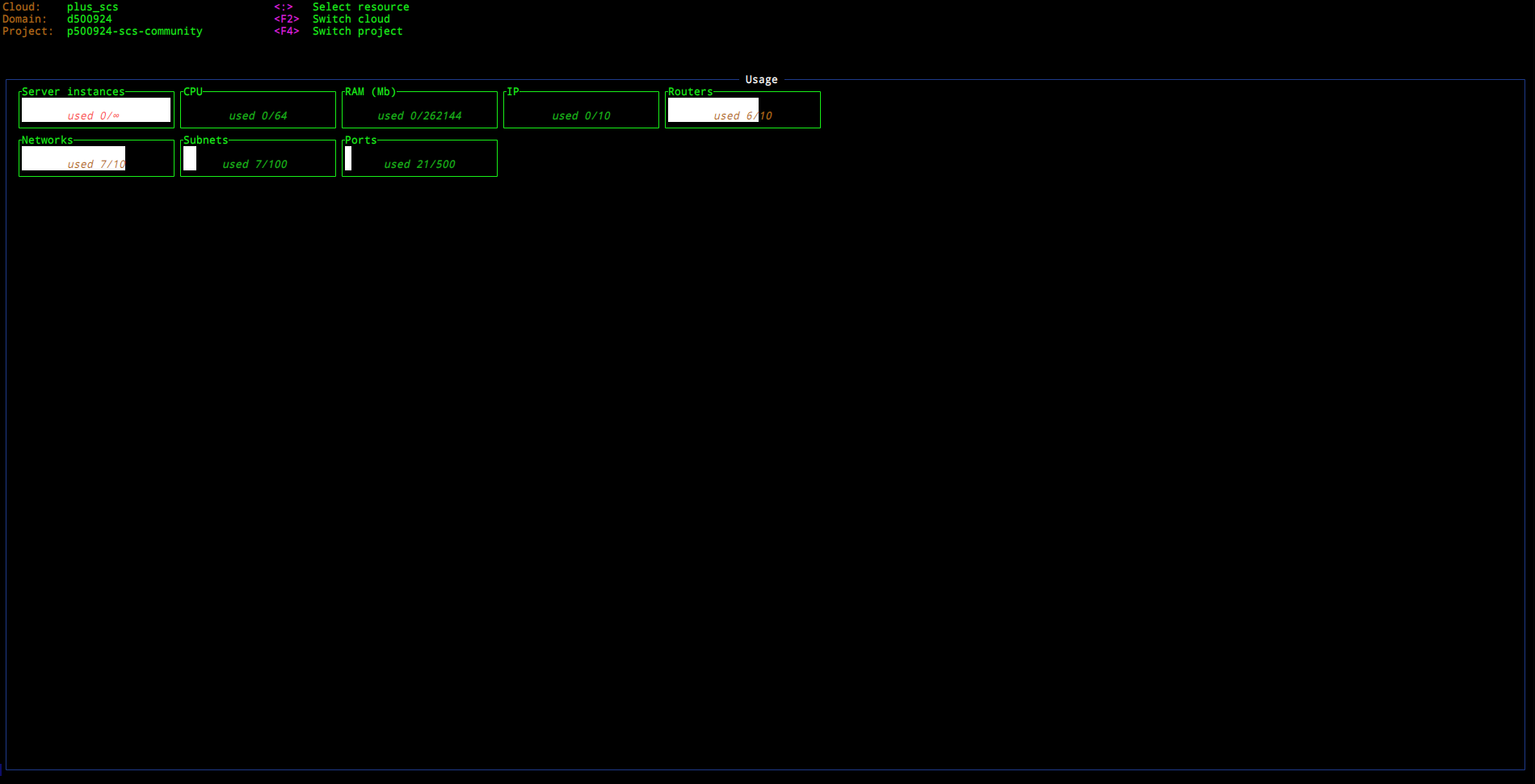

Home

The Home view serves as an entry point for the cloud. It shows some service quotas to give a better overview of currently available and provisioned resources.

Volumes

The Volumes view lists all block storage volumes accessible in the current project. A few additional filters are available to further fine-tune the results list.

Volume Snapshots

The VolumeSnapshots view lists all block storage volume snapshots in the current project. A few additional filters are available to further fine-tune the results list.

Volume Backups

The VolumeBackups view lists all block storage volume backups in the current project. A few additional filters are available to further fine-tune the results list.

Compute Servers

The Servers view lists all servers in the current project. A few additional filters are available (i.e. all_domains).

It is also possible to list all instance actions performed on the selected entry (by pressing <a>), as well as the events related to a selected instance action (by pressing <e>).

Flavors

The Flavors view lists all flavors accessible in the current project. A few additional filters are available to further fine-tune the results list.

It may be useful to list all VMs started with the selected flavor, which is shown after the user presses <s>.

Compute Aggregates

The ComputeAggregates view lists all aggregates configured in the cloud. Typically this is only accessible to administrators.

Compute Hypervisors

The Hypervisors view lists all hypervisors configured in the cloud. Typically this is only accessible to administrators.

DNS Zones

This view lists all DNS zones.

Pressing <r> on a zone switches to listing the recordsets of the selected zone.

DNS Recordsets

This view lists all DNS recordsets.

Identity Application Credentials

For a regular user, the ApplicationCredentials view shows the application credentials of the current user. As an admin, or a user with the required privileges, it is also possible to show the application credentials of a specific user from the Users view.

Identity Groups

By default all groups in the current domain are shown. With an admin connection this may differ, but in general the results are equal to invoking the CLI with the current connection.

Pressing <u> on a group switches to listing the users that are members of the selected group.

Identity Users

By default all users in the current domain are shown. With an admin connection this may differ, but in general the results are equal to invoking the CLI with the current connection.

The view makes it possible to enable/disable the currently selected user, as well as to list the user's application credentials. More possibilities will be added in the future (i.e. changing the user password, deletion, e-mail update, etc.).

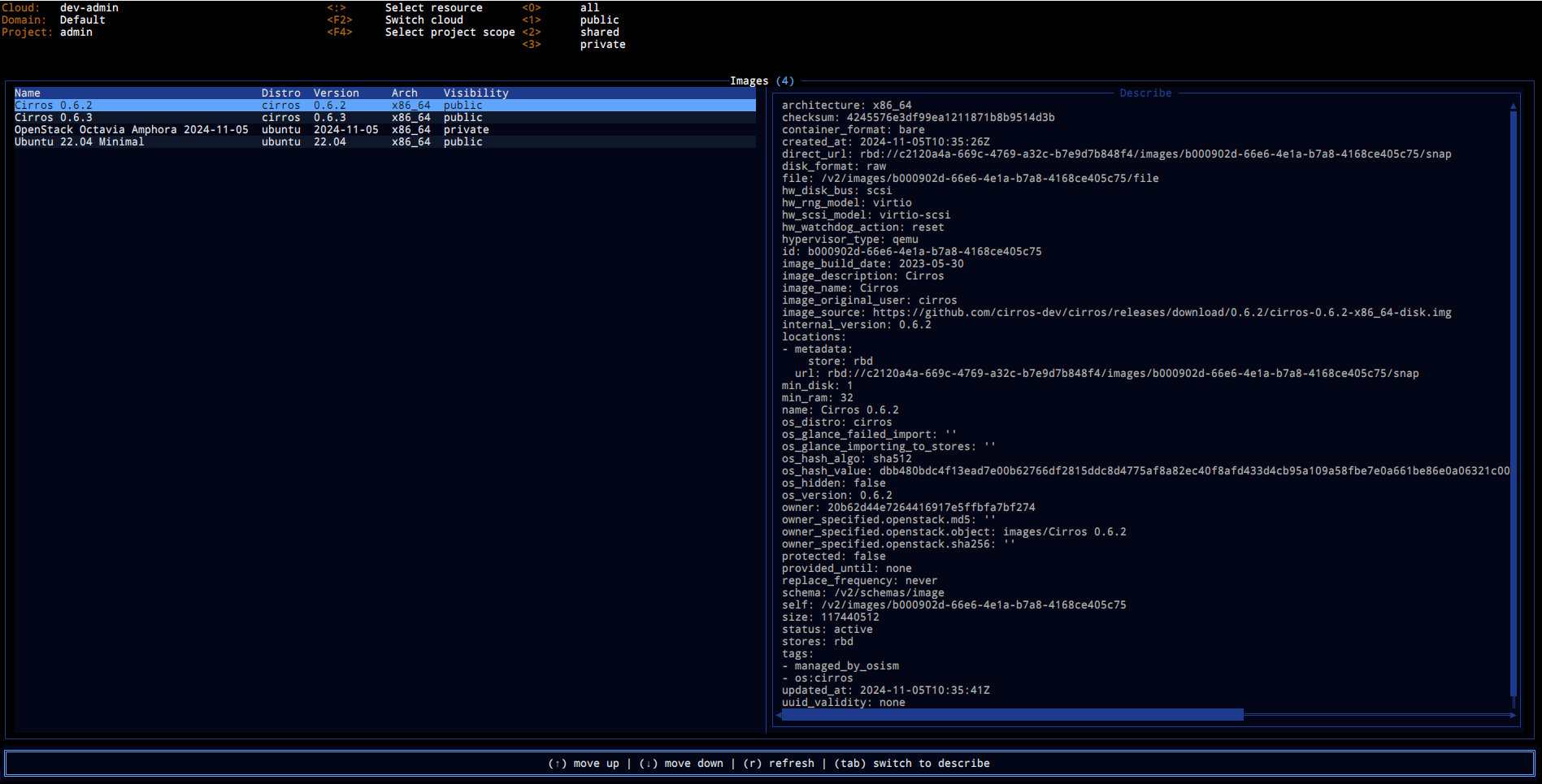

Images

The Images view lists all images accessible in the current project. A few additional filters are available to further fine-tune the results list.

Load Balancer

The Load Balancer views allow traversing the load balancer (Octavia) resources.

Networks

The Network view lists all networks in the current project. By default, pressing Enter on a selected entry switches to the Subnet view, filtered to the currently selected network.

Subnetworks

The Subnet view lists all subnets in the current project.

Network Security Groups

The SecurityGroups view lists all security groups in the current project.

By default, pressing Enter on a selected entry switches to the SecurityGroupRules view, filtered to the currently selected security group.

Network Security Group Rules

The SecurityGroupRules view lists all security group rules in the current project.

Configuration

Certain aspects of the TUI can be configured using a configuration file.

The config file can be in YAML or JSON format and is placed under XDG_CONFIG_HOME/openstack_tui, named config.yaml or views.yaml.

It is possible to split the configuration into dedicated files (e.g. views.yaml for configuring views). Unlike clouds.yaml/secure.yaml, the files are merged in no particular order, so the behavior is unpredictable when the same configuration option is set to different values in different files.
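The lookup of the configuration directory can be sketched as follows. This is a minimal sketch, not the TUI's actual code: it assumes the usual XDG Base Directory rule (fall back to $HOME/.config when XDG_CONFIG_HOME is unset, empty, or relative), and the names tui_config_dir and candidate_files are hypothetical.

```rust
use std::path::{Path, PathBuf};

/// File names mentioned above; the TUI merges them in no particular order.
const CONFIG_FILE_NAMES: [&str; 2] = ["config.yaml", "views.yaml"];

/// Resolve the directory holding the TUI config files (hypothetical helper).
///
/// Assumption: the usual XDG Base Directory rule applies, i.e. use
/// `XDG_CONFIG_HOME` if it is set to an absolute path, otherwise fall back
/// to `$HOME/.config`. Both values are passed in explicitly instead of
/// reading the environment, so the function is pure and easy to test.
fn tui_config_dir(xdg_config_home: Option<&str>, home: Option<&str>) -> Option<PathBuf> {
    let base = match xdg_config_home {
        // Unset, empty, or relative values are ignored, as the XDG spec requires.
        Some(dir) if Path::new(dir).is_absolute() => PathBuf::from(dir),
        // Without a usable HOME there is no directory to return.
        _ => Path::new(home.filter(|h| !h.is_empty())?).join(".config"),
    };
    Some(base.join("openstack_tui"))
}

/// Candidate config files inside `dir`. The order here carries no meaning,
/// since the files are merged in no particular order.
fn candidate_files(dir: &Path) -> Vec<PathBuf> {
    CONFIG_FILE_NAMES.iter().map(|&name| dir.join(name)).collect()
}
```

Passing the environment values as arguments keeps the function free of side effects; a caller would typically feed it `std::env::var("XDG_CONFIG_HOME").ok()` and `std::env::var("HOME").ok()`, converted with `as_deref()`.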

Default config

    ---
    # Mode keybindings in the following form
    # <Mode>:
    #   <shortcut>:
    #     action: <ACTION TO PERFORM>
    #     description: <DESCRIPTION USED IN TUI>
    mode_keybindings:
      Home: {}
      # Block Storage views
      BlockStorageBackups:
        "y":
          action: DescribeApiResponse
          description: YAML
      BlockStorageSnapshots:
        "y":
          action: DescribeApiResponse
          description: YAML
      BlockStorageVolumes:
        "y":
          action: DescribeApiResponse
          description: YAML
        "ctrl-d":
          action: DeleteBlockStorageVolume
          description: Delete
      # Compute views
      ComputeAggregates:
        "y":
          action: DescribeApiResponse
          description: YAML
      ComputeServers:
        "y":
          action: DescribeApiResponse
          description: YAML
        "0":
          action:
            SetComputeServerListFilters: {}
          description: Default filters
          type: Filter
        "1":
          action:
            SetComputeServerListFilters: {"all_tenants": "true"}
          description: All tenants (admin)
          type: Filter
        "ctrl-d":
          action: DeleteComputeServer
          description: Delete
        "c":
          action: ShowServerConsoleOutput
          description: Console output
        "a":
          action: ShowComputeServerInstanceActions
          description: Instance actions
      ComputeServerInstanceActions:
        "y":
          action: DescribeApiResponse
          description: YAML
        "e":
          action: ShowComputeServerInstanceActionEvents
          description: Events
      ComputeFlavors:
        "y":
          action: DescribeApiResponse
          description: YAML
        "s":
          action: ShowComputeServersWithFlavor
          description: Servers
      ComputeHypervisors:
        "y":
          action: DescribeApiResponse
          description: YAML
      # DNS views
      DnsRecordsets:
        "y":
          action: DescribeApiResponse
          description: YAML
        "0":
          action:
            SetDnsRecordsetListFilters: {}
          description: Default filters
          type: Filter
      DnsZones:
        "y":
          action: DescribeApiResponse
          description: YAML
        "<enter>":
          action: ShowDnsZoneRecordsets
          description: Recordsets
        "ctrl-d":
          action: DeleteDnsZone
          description: Delete
      # Identity views
      IdentityApplicationCredentials:
        "y":
          action: DescribeApiResponse
          description: YAML
      IdentityGroups:
        "y":
          action: DescribeApiResponse
          description: YAML
        "u":
          action: ShowIdentityGroupUsers
          description: Group users
        "d":
          action: IdentityGroupDelete
          description: Delete (todo!)
        "a":
          action: IdentityGroupCreate
          description: Create new group (todo!)
      IdentityGroupUsers:
        "y":
          action: DescribeApiResponse
          description: YAML
        "a":
          action: IdentityGroupUserAdd
          description: Add new user into group (todo!)
        "r":
          action: IdentityGroupUserRemove
          description: Remove user from group (todo!)
      IdentityProjects:
        "y":
          action: DescribeApiResponse
          description: YAML
        "s":
          action: SwitchToProject
          description: Switch to project
      IdentityUsers:
        "y":
          action: DescribeApiResponse
          description: YAML
        "ctrl-d":
          action: IdentityUserDelete
          description: Delete
        "e":
          action: IdentityUserFlipEnable
          description: Enable/Disable user
        "a":
          action: IdentityUserCreate
          description: Create new user (todo!)
        "p":
          action: IdentityUserSetPassword
          description: Set user password (todo!)
        "c":
          action: ShowIdentityUserApplicationCredentials
          description: Application credentials
      # Image views
      ImageImages:
        "y":
          action: DescribeApiResponse
          description: YAML
        "0":
          action:
            SetImageListFilters: {}
          description: Default filters
          type: Filter
        "1":
          action:
            SetImageListFilters: {"visibility": "public"}
          description: public
          type: Filter
        "2":
          action:
            SetImageListFilters: {"visibility": "shared"}
          description: shared
          type: Filter
        "3":
          action:
            SetImageListFilters: {"visibility": "private"}
          description: private
          type: Filter
        "ctrl-d":
          action: DeleteImage
          description: Delete
      # LoadBalancer views
      LoadBalancers:
        "y":
          action: DescribeApiResponse
          description: YAML
        "l":
          action: ShowLoadBalancerListeners
          description: Listeners
        "p":
          action: ShowLoadBalancerPools
          description: Pools
      LoadBalancerListeners:
        "y":
          action: DescribeApiResponse
          description: YAML
      LoadBalancerPools:
        "y":
          action: DescribeApiResponse
          description: YAML
        "<enter>":
          action: ShowLoadBalancerPoolMembers
          description: Members
        "h":
          action: ShowLoadBalancerPoolHealthMonitors
          description: HealthMonitors
      LoadBalancerPoolMembers:
        "y":
          action: DescribeApiResponse
          description: YAML
      LoadBalancerHealthMonitors:
        "y":
          action: DescribeApiResponse
          description: YAML
      # Network views
      NetworkNetworks:
        "y":
          action: DescribeApiResponse
          description: YAML
        "<enter>":
          action: ShowNetworkSubnets
          description: Subnets
      NetworkRouters:
        "y":
          action: DescribeApiResponse
          description: YAML
      NetworkSubnets:
        "y":
          action: DescribeApiResponse
          description: YAML
        "0":
          action:
            SetNetworkSubnetListFilters: {}
          description: All
          type: Filter
      NetworkSecurityGroups:
        "y":
          action: DescribeApiResponse
          description: YAML
        "<enter>":
          action: ShowNetworkSecurityGroupRules
          description: Rules
      NetworkSecurityGroupRules:
        "y":
          action: DescribeApiResponse
          description: YAML
        "0":
          action:
            SetNetworkSecurityGroupRuleListFilters: {}
          description: All
          type: Filter
        "ctrl-n":
          action: CreateNetworkSecurityGroupRule
          description: Create
        "ctrl-d":
          action: DeleteNetworkSecurityGroupRule
          description: Delete

    # Global keybindings
    # <KEYBINDING>:
    #   action: <ACTION>
    #   description: <TEXT>
    global_keybindings:
      "<q>":
        action: Quit
        description: Quit
      "<Ctrl-c>":
        action: Quit
        description: Quit
      "<Ctrl-z>":
        action: Suspend
        description: Suspend
      "F1":
        action:
          Mode:
            mode: Home
            stack: false
        description: Home
      "F2":
        action: CloudSelect
        description: Select cloud
      ":":
        action: ApiRequestSelect
        description: Select resource
      "<F4>":
        action: SelectProject
        description: Select project
      "<ctrl-r>":
        action: Refresh
        description: Reload data

    # Mode aliases
    # <ALIAS>: <MODE>
    mode_aliases:
      "aggregates (compute)": "ComputeAggregates"
      "application credentials (identity)": "IdentityApplicationCredentials"
      "backups": "BlockStorageBackups"
      "flavors": "ComputeFlavors"
      "groups (identity)": "IdentityGroups"
      "host-aggregates (compute)": "ComputeAggregates"
      "hypervisors (compute)": "ComputeHypervisors"
      "images": "ImageImages"
      "loadbalancers": "LoadBalancers"
      "lb (loadbalancers)": "LoadBalancers"
      "listeners (loadbalancer)": "LoadBalancerListeners"
      "lbl (loadbalancer listeners)": "LoadBalancerListeners"
      "pool (loadbalancer)": "LoadBalancerPools"
      "lbp (loadbalancer pools)": "LoadBalancerPools"
      "healthmonitors (loadbalancer)": "LoadBalancerHealthMonitors"
      "lbhm (loadbalancer health monitors)": "LoadBalancerHealthMonitors"
      "nets": "NetworkNetworks"
      "networks": "NetworkNetworks"
      "projects": "IdentityProjects"
      "recordsets (dns)": "DnsRecordsets"
      "routers": "NetworkRouters"
      "security groups (network)": "NetworkSecurityGroups"
      "security group rules (network)": "NetworkSecurityGroupRules"
      "servers": "ComputeServers"
      "sg": "NetworkSecurityGroups"
      "sgr": "NetworkSecurityGroupRules"
      "snapshots": "BlockStorageSnapshots"
      "subnets (network)": "NetworkSubnets"
      "volumes": "BlockStorageVolumes"
      "users": "IdentityUsers"
      "zones (dns)": "DnsZones"

    # View output
    # <RESOURCE_KEY>:
    #   fields: <ARRAY OF COLUMNS TO SHOW>
    #   wide: true
    views:
      # Block Storage
      block_storage.backup:
        default_fields: [id, name, az, size, status, created_at]
      block_storage.snapshots:
        default_fields: [id, name, status, created_at]
      block_storage.volume:
        default_fields: [id, name, az, size, status, updated_at]
      # compute
      compute.aggregate:
        default_fields: [name, uuid, az, updated_at]
      compute.flavor:
        default_fields: [id, name, vcpus, ram, disk, swap]
      compute.hypervisor:
        default_fields: [ip, hostname, status, state]
      compute.server/instance_action/event:
        default_fields: [event, result, start_time, finish_time, host]
      compute.server/instance_action:
        default_fields: [id, action, message, start_time, user_id]
      compute.server:
        default_fields: [id, name, flavor, status, created, updated]
        fields:
          - name: flavor
            json_pointer: "/original_name"
      # dns
      dns.recordset:
        default_fields: [name, status, type, created, updated]
      dns.zone:
        default_fields: [name, status, type, email, created, updated]
      # identity
      identity.group:
        default_fields: [id, name, domain, description]
      identity.project:
        default_fields: [id, name, parent_id, enabled, domain_id]
      identity.user/application_credential:
        default_fields: [id, name, expires_at, unrestricted, description]
      identity.user:
        default_fields: [name, enabled, password_expires_at]
      # image
      image.image:
        default_fields: [id, name, distro, version, visibility, min_disk, min_ram]
      # load balancer
      load-balancer.healthmonitor:
        default_fields: [id, name, status, type]
      load-balancer.listener:
        default_fields: [id, name, status, protocol, port]
      load-balancer.loadbalancer:
        default_fields: [id, name, status, address]
      load-balancer.pool/member:
        default_fields: [id, name, status, port]
      load-balancer.pool:
        default_fields: [id, name, status, protocol]
      # network
      network.network:
        default_fields: [id, name, status, description, created_at, updated_at]
      network.router:
        default_fields: [id, name, status, description, created_at, updated_at]
      network.subnet:
        default_fields: [id, name, cidr, description, created_at]
      network.security_group_rule:
        default_fields: [id, ethertype, direction, protocol, port_range_min, port_range_max, description]
      network.security_group:
        default_fields: [id, name, description, created_at, updated_at, description]

Views configuration

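Every resource view can be configured in a separate section of the config. The section key identifies the resource, for example compute.server, or compute.server/instance_action for a nested resource (see the default config above). The options below define which fields are populated as columns. All column names are always converted to UPPER CASE.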

Resource view options

- default_fields (list[str])

  A list of fields to be displayed by default (when not in wide mode). If not specified, only certain internally determined fields are displayed. Columns are shown in the order given in the list.

- wide (bool)

  If set to true, display all fields. If set to false, display only the default_fields.

- fields (list[obj])

  A list of column configurations. Each entry consists of:

  - name (str) - field name (resource attribute name)
  - width (int) - column width in characters
  - min_width (int) - minimum column width in characters
  - max_width (int) - maximum column width in characters
  - json_pointer (str) - JSON pointer used to extract a value from the resource field. It is only applied in the regular list view, not in wide mode.
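To illustrate how a json_pointer such as "/original_name" (used for the flavor column of compute.server in the default config) picks a value out of a field, here is a small sketch of RFC 6901 JSON pointer resolution. It assumes the server's flavor field is an object containing original_name, as returned by recent Compute API microversions. The Json type, resolve_pointer and column_header are hypothetical and self-contained; a real implementation would more likely rely on a JSON library, and the TUI's own code may differ.

```rust
use std::collections::BTreeMap;

/// Minimal, hypothetical stand-in for a parsed JSON value; only the variants
/// needed for this sketch are included.
#[derive(Debug, Clone, PartialEq)]
enum Json {
    String(String),
    Array(Vec<Json>),
    Object(BTreeMap<String, Json>),
}

/// Resolve an RFC 6901 JSON pointer (e.g. "/original_name") against `value`.
/// Returns `None` for a malformed pointer or when nothing matches.
fn resolve_pointer<'a>(value: &'a Json, pointer: &str) -> Option<&'a Json> {
    // The empty pointer refers to the whole value.
    if pointer.is_empty() {
        return Some(value);
    }
    // Any other pointer must start with '/'.
    let rest = pointer.strip_prefix('/')?;
    let mut current = value;
    for raw in rest.split('/') {
        // RFC 6901: unescape "~1" to "/" first, then "~0" to "~".
        let token = raw.replace("~1", "/").replace("~0", "~");
        current = match current {
            Json::Object(map) => map.get(&token)?,
            Json::Array(items) => {
                // Array indices are decimal digits without leading zeros.
                let valid = !token.is_empty()
                    && token.bytes().all(|b| b.is_ascii_digit())
                    && (token == "0" || !token.starts_with('0'));
                if !valid {
                    return None;
                }
                items.get(token.parse::<usize>().ok()?)?
            }
            // Scalars have no children to descend into.
            Json::String(_) => return None,
        };
    }
    Some(current)
}

/// Column headers are displayed in upper case, as described above.
fn column_header(field_name: &str) -> String {
    field_name.to_uppercase()
}
```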

Possible errors

This chapter describes potential errors that can occur due to misconfiguration either on the user side or on the provider side.

Service catalog and version discovery

The URL contained in a service endpoint's version document may be malformed or simply wrong (e.g. returning 404). While the SDK may be able to cope with certain errors, the result is not always correct. Errors of this type must be fixed by the cloud provider. As a temporary workaround, users can set <SERVICE>_endpoint_override in clouds.yaml.
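How such an override could take precedence over the discovered endpoint is sketched below. The key naming is an assumption borrowed from the Python openstacksdk convention (service type lower-cased, "-" replaced by "_"); endpoint_override_key and effective_endpoint are hypothetical helpers, and the settings map stands in for one cloud entry of clouds.yaml.

```rust
use std::collections::HashMap;

/// Build the clouds.yaml key that overrides the endpoint of a service.
///
/// Assumption: `<SERVICE>` is the service type, lower-cased, with '-'
/// replaced by '_', so "load-balancer" becomes "load_balancer_endpoint_override".
fn endpoint_override_key(service_type: &str) -> String {
    format!("{}_endpoint_override", service_type.to_lowercase().replace('-', "_"))
}

/// Return the endpoint to use for `service_type` (hypothetical helper):
/// an override from the cloud settings wins over the URL obtained through
/// the service catalog and version discovery.
fn effective_endpoint<'a>(
    cloud_settings: &'a HashMap<String, String>,
    service_type: &str,
    discovered: &'a str,
) -> &'a str {
    cloud_settings
        .get(&endpoint_override_key(service_type))
        .map(String::as_str)
        .unwrap_or(discovered)
}
```

Under this assumption, a broken compute endpoint would be worked around by setting compute_endpoint_override in the affected cloud's entry of clouds.yaml.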

Invalid port

The port in the URL must be a valid port number.

Absolute path

The URL must be absolute. The official version discovery procedure allows relative URLs, but the official services do not use them, so this should never happen in practice.

Format

The URL must match the following regular expression:

^(?<scheme>.+)://(?<host>[^:]+):(?<port>[^/]+)/(?<path>.*)$

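The checks from the Invalid port, Absolute path and Format sections can be approximated without a regex engine, as in the sketch below. It is a hand-written approximation of the pattern, not the SDK's code; VersionUrl, UrlError and parse_version_url are hypothetical names, and mapping a missing "://" to the absolute-URL error is an assumption. Read literally, the pattern requires an explicit port, so a URL such as https://example.com/v2 does not match.

```rust
/// Parts captured by ^(?<scheme>.+)://(?<host>[^:]+):(?<port>[^/]+)/(?<path>.*)$
#[derive(Debug, PartialEq)]
struct VersionUrl<'a> {
    scheme: &'a str,
    host: &'a str,
    port: u16,
    path: &'a str,
}

/// Hypothetical error kinds mirroring the sections above.
#[derive(Debug, PartialEq)]
enum UrlError {
    /// No "scheme://" part, i.e. a relative URL (assumed mapping).
    NotAbsolute,
    /// The URL does not have the scheme://host:port/path shape.
    Format,
    /// The port is not a number in the range 0..=65535.
    InvalidPort,
}

/// Hand-written approximation of the regular expression (not the SDK's code).
fn parse_version_url(url: &str) -> Result<VersionUrl<'_>, UrlError> {
    // The regex's greedy `.+` scheme behaves like a split on the first "://"
    // for ordinary URLs that contain "://" only once.
    let (scheme, rest) = url.split_once("://").ok_or(UrlError::NotAbsolute)?;
    // host: one or more characters up to the first ':'.
    let (host, rest) = rest.split_once(':').ok_or(UrlError::Format)?;
    // port: one or more characters up to the first '/'; the rest is the path.
    let (port, path) = rest.split_once('/').ok_or(UrlError::Format)?;
    if scheme.is_empty() || host.is_empty() || port.is_empty() {
        return Err(UrlError::Format);
    }
    let port = port.parse::<u16>().map_err(|_| UrlError::InvalidPort)?;
    Ok(VersionUrl { scheme, host, port, path })
}
```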
Unreachable url

The URL in the version document must be a working URL. Examples of non-working URLs:

- https://example.com//v2
- http://localhost:8080/invalid_prefix/v2

The SDK ignores such information, as if nothing usable had been found at the attempted URL at all. The reason is that it indicates a major misconfiguration on the provider side, and most likely all other links in the API (pagination, self links, etc.) would be broken as well.

Version url cannot be a base

The URL must be a valid URI.

Wrong:

- ftp://rms@example.com
- unix:/run/foo.socket
- data:text/plain,Stuff

Discovered URL has different prefix

Under some misconfigurations the discovery document may point to a location quite different from the place where the discovery document itself was found (e.g. the discovered URL is http://localhost/v2.1 while the document itself was found at http://localhost/prefix/v2.1). This is a service misconfiguration that needs to be addressed by the cloud provider. If it causes errors on the client side, configuring <SERVICE>_endpoint_override may or may not help.

It is not possible to simply validate the discovered URL, because some services do not implement discovery properly. For that reason, the SDK can do nothing more than emit a warning.
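A warning-only prefix check of the kind described above might look like the following sketch. It is an illustration under assumptions, not the SDK's logic: it compares only the path part in front of the last segment (assumed to be the version, e.g. v2.1) of the URL where the document was found and of the URL it advertises, ignores scheme and host, and never rejects the discovered URL. url_path, path_prefix and prefix_warning are hypothetical.

```rust
/// Path part of an absolute URL ("scheme://authority/path"), or "" if there is none.
fn url_path(url: &str) -> &str {
    let after_scheme = url.split_once("://").map_or(url, |(_, rest)| rest);
    after_scheme.find('/').map_or("", |i| &after_scheme[i..])
}

/// Path before the last non-empty segment, e.g. "/prefix/v2.1/" -> "/prefix".
fn path_prefix(path: &str) -> &str {
    let trimmed = path.trim_end_matches('/');
    trimmed.rfind('/').map_or("", |i| &trimmed[..i])
}

/// Hypothetical warning-only check: compare the prefixes in front of the
/// version segment. Scheme and host are deliberately not compared.
fn prefix_warning(found_at: &str, discovered: &str) -> Option<String> {
    let expected = path_prefix(url_path(found_at));
    let actual = path_prefix(url_path(discovered));
    if expected == actual {
        None
    } else {
        Some(format!(
            "discovered URL {discovered} uses path prefix {actual:?}, \
             but the version document was found under {expected:?}"
        ))
    }
}
```

Returning an Option<String> rather than an error keeps the discovered URL usable, matching the warning-only behavior described in the text.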